ROBB LINDGREN

GRASP (Gesture Augmented Simulations for Supporting Explanations)

NSF Awards: 1432424

This EHR Core Research project examines how body movement supports student reasoning about critical science concepts that have unseen structures and unobservable mechanisms (e.g., molecular interactions). Specifically, the project seeks to identify the types of body motion that support causal explanations of observable phenomena, referred to as Embodied Explanatory Expressions (EEEs). Later phases of the project are exploring whether the identified EEEs can be integrated into the control structures of online simulations using available motion-sensing input devices (e.g., Microsoft Kinect, Leap Motion).

-

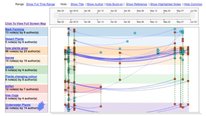

Speech-Based Learning Analytics for Collaboration

Speech-Based Learning Analytics for Collaboration

Cynthia D'Angelo

-

Cognitive and Neural Correlates of Spatial Thinking

Cognitive and Neural Correlates of Spatial Thinking

Emily Grossnickle

-

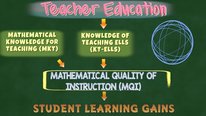

BREAKING DOWN LANGUAGE BARRIERS: MATH FOR ELLS

BREAKING DOWN LANGUAGE BARRIERS: MATH FOR ELLS

Brittany Webre

-

Connecting Idea Threads for Sustained Discourse

Connecting Idea Threads for Sustained Discourse

Jianwei Zhang

-

Accessible PhET Simulations

Accessible PhET Simulations

Emily Moore

-

Robotics and Girls' Internalized Stereotypes

Robotics and Girls' Internalized Stereotypes

Florence Sullivan

7602 Views

William Finzer

It’s absolutely wonderful that we can begin to use gesture as an input to educational software. And this project’s plans to look at gesture with respect to causal explanation seem very promising. I love the glimpses we get in the video of users’ hands changing things on the screen! Do you have some examples of gestures that students use for causality? Do you think they are all likely to be context specific, or might they generalize somehow?

Rob Wallon

Hi William,

Three examples of student gestures about a gas pressure phenomenon can be found in a paper that I presented at NARST last month (available at http://hdl.handle.net/2142/89976). For all three students, their initial explanations involved gestures that were more descriptive of the phenomenon and discussed air from a macroscopic perspective. Their final explanations after using the simulation included gestures that provided causal accounts that included molecules colliding with the container.

Student gestures for our other two topics (heat transfer and seasons) were quite different from the gestures students used for gas pressure. However, I think that gas pressure and heat transfer gestures were more similar because they both involve a particulate view of matter. A reason that I think could account for this is that gestures tend to be more helpful when they are congruent with the target concepts. Perhaps some gestures could productively generalize to similar concepts, but my take is that different concepts call for the use of different gestures.

Pati Ruiz

Dean of Studies

This project is fascinating – using students’ own physical actions to augment existing scientific models, what a great idea! As a teacher, I’m always interested in the student’s perspective on things. What reactions did you get from the students who have participated? What do they see their role in this project as? Have they used gestures different from those that you have seen from others, like experts?

This seems like a great scaffold to help learners understand critical science concepts in a visual and more interactive way. Will the hand gestures also be included in the finished models? Or a video of hand gestures that could be associated with each concept? I wonder if that would be helpful to learners, but also to teachers in K-12 who are teaching some of these concepts to their students.

Rob Wallon

Hi Pati,

Thanks for the questions and comments! I am a research assistant on the project, and I can speak to some of your questions.

>>What reactions did you get from the students who have participated?

Most students have really enjoyed using the simulations, and many of them have expressed that they found it helpful for their thinking about the phenomena that we discussed.

>>What do they see their role in this project as?

We are fortunate to collaborate with some awesome teachers who help their students frame their participation as an opportunity to contribute to research and the development of a digital learning environment that will be used by many students in the future. We tell all students that we are interested in their ideas so that we can improve the simulations, and I think some students view that as an additional benefit of participation.

>>Have they used gestures different from those that you have seen from others, like experts?

In Year 1 of the project we interviewed students with conventional simulations and observed gestures that they naturally used. In the current Year 2 of the project we have designed the gesture interaction of the simulations based on gestures from Year 1 that were productive for student explanations. In this way some of the students of Year 1 could be considered the experts! We have not formally collected data on gestures from scientists or science teachers explaining the phenomena, but it would make for an interesting comparison.

>>Will the hand gestures also be included in the finished models?

Absolutely.

>>Or a video of hand gestures that could be associated with each concept? I wonder if that would be helpful to learners, but also to teachers in K-12 who are teaching some of these concepts to their students.

I am interested in thinking about how to support teachers with using the digital technologies and even more importantly in supporting teachers in the difficult work of attending to student thinking. I think that videos can play a role in this. Also, the next iteration of our simulations has some digital representations to help students gesture in particular ways, and I am excited for students to try them.

Pati Ruiz

Dean of Studies

Rob, thanks for the quick response! This is exciting work, it seems like the next iteration will have a lot to offer both teachers and students!

Jenna Marks

Doctoral Student in Cognitive Studies in Education

What a wonderful project! My colleague at Teachers College was experimenting with using Leap Motion to help students develop understanding of tectonic plate movements, so I’ve had some experience with the tech before. I am excited about your next iteration where students have models of “correct” gestures – I think this is definitely a critical component for more complex topics.

One thing I am curious about is whether your team has researched how we can diagnose misconceptions through gesture. Do you program your simulations to react to both correct and incorrect representations of a concept? Have you found that students who lack a full understanding of a dynamic activity gesture in predictable ways?

Robb Lindgren

Assistant Professor

Thanks Jenna for your comments and questions! I think there is a lot of potential for looking at misconceptions, or emerging ideas, in students’ gestures. Before even developing the simulations we did a lot of interviews with students to see what kinds of gestures they bring naturally to their explanations, and sometimes those gestures seemed to support ideas that run counter to the canonical understanding of the science topics. For example, in describing the causes of seasons, many students would use one hand to represent the Earth and the other to represent the Sun, and in the process they would reveal an incomplete understanding of how the Earth moves in relation to the Sun (e.g., rotation/revolution confusion, etc.). Seeing these representations in their hands was always an important clue for the interviewer and often led to changes in the questions/tasks they followed up with.

In the simulations themselves we haven’t really thought about auto-detecting misconceptions through students’ gestures, though this is certainly a possibility. Our approach instead has been to guide students towards using the kinds of gestures that have proven productive in our previous interviews, and we do this through the simulation interface, cuing them to use their hands to represent components of the simulation (e.g., molecules, light rays) and then showing those parts of the simulation as “activated” when the Leap successfully recognizes their hands. We of course would like to make this recognition system as flexible and adaptable as possible, potentially even letting students define the meaning of their own gestures, but we think this is a good place to start.

Thanks again for the comments and questions. Let us know if you have more thoughts!

Avron Barr

Consultant

Fascinating project and video. Thank you. Were there aspects of Leap Motion’s product that you would like to see improved? How do you imagine that new technologies like gesture recognition will find their way into the classroom?

Robb Lindgren

Assistant Professor

Hello Avron, thanks for the comment. What we like about the Leap Motion is that it’s very affordable and easy to set up. The hand tracking is pretty good, but there is definitely room for improvement—it can be a little finicky depending on the lighting and other environmental conditions. There are also some limitations of having the Leap sitting on a desk in front of the computer with the camera facing upwards; certain kinds of gestures are difficult to track even though they might be optimal for showing science concepts (it’s probably why we’re noticing that a lot of people are using the Leap on head-mounted displays rather than for traditional desktop applications). But overall it’s worked fairly well for us with the prototypes we’ve developed.

We think there is a lot of potential for gesture recognition in and around the classroom. We think this can be especially useful for simulations such as GRASP where the goal is really to get students generating explanations for other students, and the simulations are there to augment those explanations. Gesture recognition technologies have a lot of potential for supporting augmented “performances” such as giving explanations or acting something out. These performances can serve as the basis for analysis and discussion in classrooms that we believe can be quite productive.

Noel Enyedy

Hi Robb, Big fan of this work—great to see the video! So my question is when you are using gesture as a way to control the simulation (replace the sliders and whatnots), are you: a) recognizing multiple spontaneous gestures to better understand the student’s existing understanding and tailor the path through the simulation? or b) training the students to use a specific gesture that reflects some key aspect of the concept or process they are trying to learn?

For example, in your gas pressure example some students might spontaneously squeeze their hands together to talk about a smaller space and some students might waggle their fingers to talk about heat. Based on where these students are starting from I could see them taking different productive paths through your simulations. But I could also see you requiring students to use the drum-on-your-palm-with-your-fingers gesture to increase the pressure in the simulation. In this case the gesture makes visible a hidden mechanism and by training their gestures you are training their thinking.

Both seem really interesting—are you going in one of these directions? Or are you thinking of this entirely differently? Again great work, can’t wait to see more!

Noel Enyedy

I think you may have answered my question in your response to Jenna!

Robb Lindgren

Assistant Professor

Hey Noel, thanks for the comment and the support! Yes, at this stage it is more approach b than approach a, although our prompts to the students aren’t quite as rigid as “do this with your hands to make X happen in the simulation.” It’s more “position your hands like this to represent light rays or molecules” and then they experiment with the simulation to figure out what that representation affords them. I’ll also add that the gestures we’ve chosen came from students themselves. The fingers-tapping-on-the-palm gesture, for example, came from one of our very first interviewees, and our approach was to see if we could get the computer to recognize what these students were doing naturally, rather than the other way around (which, as you know, is how this typically works in learning tech design).

This all said, not all students come to the table with these representations, ahem, at hand. And in this case we think using the interface to guide the student to perform particular gestures that afford certain ways of thinking can be a really powerful intervention. It’s consistent with what I’ve written about in terms of body cueing, and Mitch Nathan made a very similar argument about body action preceding understanding in his AERA talk (that you were at!) last month. I think there are probably only certain conditions under which this type of approach will work, but it’s exciting that many of us are starting to investigate what those conditions are and how new designs can create them.

K. ryan

Wow – Robb this has exciting implications for Teacher Education programs… would be wonderful to introduce to pre-service teachers!

Sophie Joerg

What an innovative idea to use gestures and capturing devices in that way! Great seeing the video.
